* If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:
* Additionally, a control plane component may have crashed or exited when started by the container runtime.
* The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)
* The HTTP call equal to 'curl -sSL failed with error: Get " dial tcp :10248: connect: connection refused.
* It seems like the kubelet isn't running or healthy.
* Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests".
* Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
* Creating static Pod manifest for "kube-scheduler"
* Creating static Pod manifest for "kube-controller-manager"
* Creating static Pod manifest for "kube-apiserver"
* Using manifest folder "/etc/kubernetes/manifests"
* Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
* Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
* Using kubeconfig folder "/etc/kubernetes"
* Generating "apiserver-etcd-client" certificate and key
* Generating "etcd/healthcheck-client" certificate and key
* etcd/peer serving cert is signed for DNS names and IPs
* Generating "etcd/peer" certificate and key
* etcd/server serving cert is signed for DNS names and IPs
* Generating "etcd/server" certificate and key

Single Node. Environment Template: (Cattle/Kubernetes/Swarm/Mesos). Steps to Reproduce: Results: This cluster is currently Provisioning; areas that interact directly with it will not be available until the API is ready.

You will see that the control plane status is unknown. If you lose (or purposely delete) two master nodes, etcd quorum will be lost and this will lead. When a node boots for the first time, the etcd data directory (/var/lib/etcd) is empty, and it will only be populated when etcd is launched. If a node is designated as a worker node, you should not expect etcd to be running on it. Also, etcd will only run on control plane nodes.
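The remark about losing two master nodes follows from etcd's majority rule: a cluster stays writable only while more than half of its voting members are up. The sketch below works through that arithmetic. It assumes a three-member control plane, which the text does not state explicitly, and `quorum` and `has_quorum` are hypothetical helper names, not part of any etcd tooling.

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with `members` voting members."""
    if members < 1:
        raise ValueError("members must be at least 1")
    return members // 2 + 1


def has_quorum(members: int, lost: int) -> bool:
    """True if the surviving members still form a majority."""
    return members - lost >= quorum(members)


if __name__ == "__main__":
    # Assumed cluster size of 3 (not stated in the text above).
    print(has_quorum(3, 1))  # one node lost: 2 of 3 remain
    print(has_quorum(3, 2))  # two nodes lost: 1 of 3 remains
```

With three members the quorum is two, so one lost node is tolerated and two are not, which matches the quorum loss described above.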

* Generating "etcd/ca" certificate and key
* Generating "front-proxy-client" certificate and key
* Generating "front-proxy-ca" certificate and key

Each node role (etcd, Control Plane, and Worker) should be assigned to a distinct node pool. When you are ready to create the cluster, you have to add a node with the etcd role.

* Generating "apiserver-kubelet-client" certificate and key
* apiserver serving cert is signed for DNS names and IPs
* Generating "apiserver" certificate and key
* Using certificateDir folder "/etc/kubernetes/pki"
* You can also perform this action in beforehand using 'kubeadm config images pull'
* This might take a minute or two, depending on the speed of your internet connection
* Pulling images required for setting up a Kubernetes cluster
* firewalld is active, please ensure ports are open or your cluster may not function correctly
* To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
* Done! kubectl is now configured to use "minikube"
* Enabling addons: default-storageclass, storage-provisioner
* kubelet.resolv-conf=/run/systemd/resolve/nf
* Preparing Kubernetes v1.17.3 on Docker 19.03.6.
* Running on localhost (CPUs=2, Memory=2460MB, Disk=145651MB).
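The "connection refused" message on port 10248 in the output above comes from a probe of the kubelet's health endpoint. The sketch below repeats that probe from Python. The URL is truncated in the output, so `http://localhost:10248/healthz` (the kubelet's usual default) is an assumption here, and `kubelet_healthy` is a hypothetical helper name.

```python
import http.client
import urllib.error
import urllib.request

# Bypass any configured HTTP proxy: the probe targets the local node only.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def kubelet_healthy(url: str = "http://localhost:10248/healthz",
                    timeout: float = 2.0) -> bool:
    """Return True if `url` answers with HTTP 200.

    Connection refused, timeouts, and HTTP error statuses all count as
    unhealthy. The default URL is an assumed kubelet default, not a value
    taken from the log above.
    """
    try:
        with _OPENER.open(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        return False


if __name__ == "__main__":
    print("kubelet healthy:", kubelet_healthy())
```

If this returns `False` on a systemd host, the kubelet's service status and journal (typically `systemctl status kubelet` and `journalctl -xeu kubelet`) are the usual next places to look.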

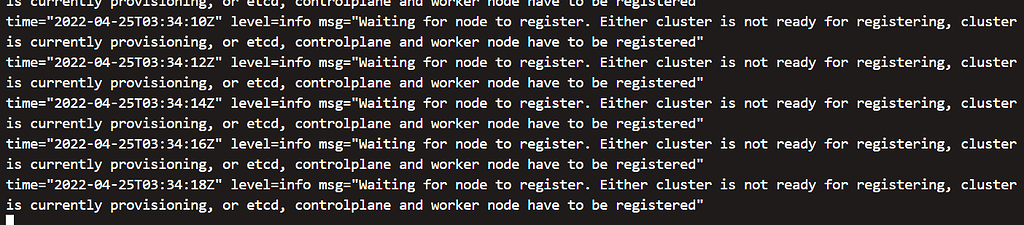

# Waiting for etcd and controlplane nodes to be registered

* Using the none driver based on user configuration
